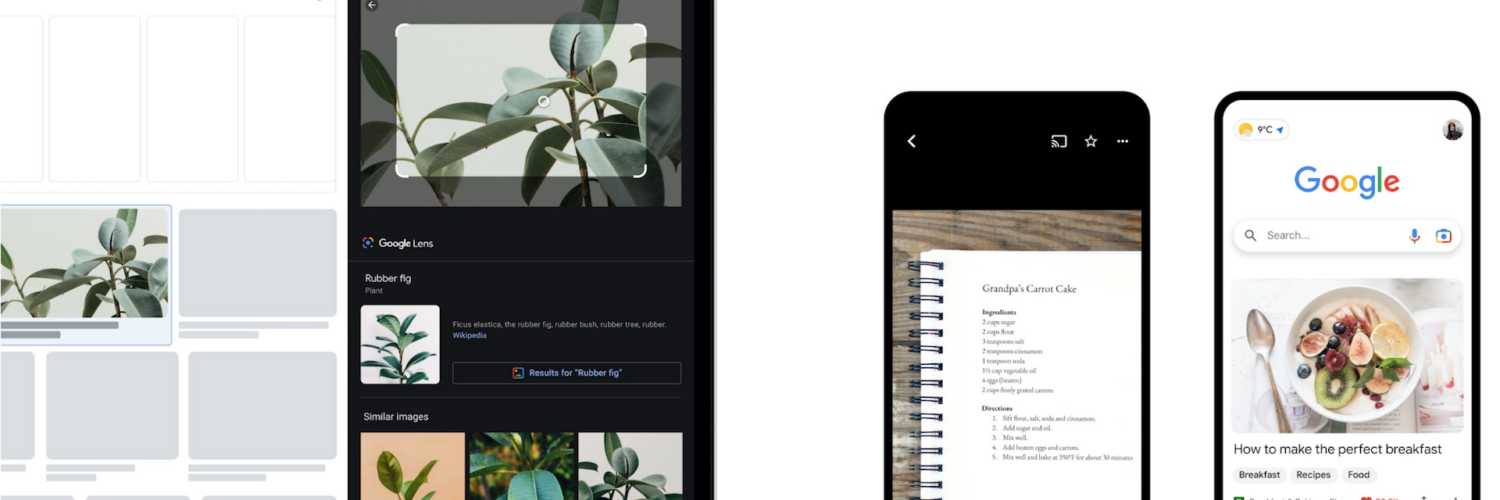

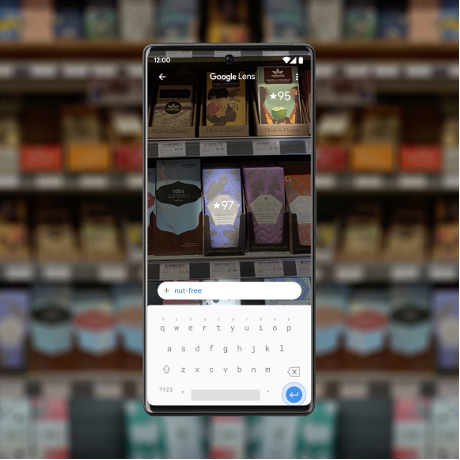

Recognising the limitations of text-only search, Google is adding more ways to find local information through multisearch, a feature of Google Lens. At the time of writing, users can search with text and images at the same time. This lets them describe what they want more precisely and get more relevant results.

Combining image and text descriptions

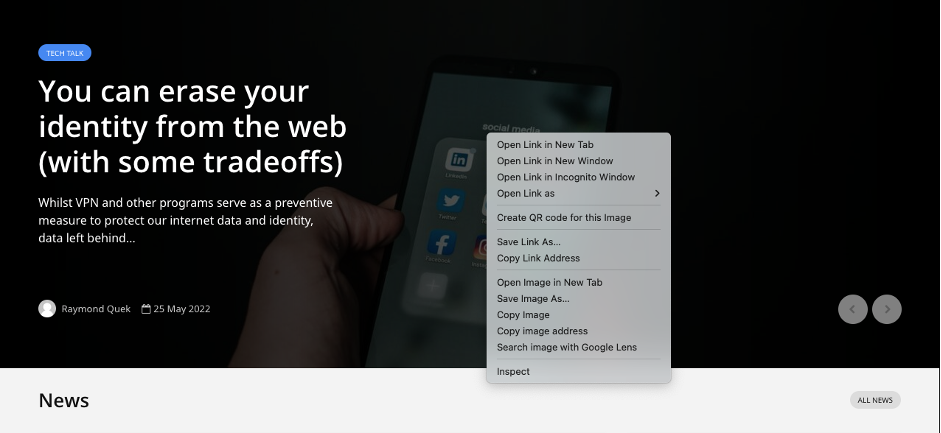

Simply open the Google app on Android or iOS, tap the Lens camera icon, and either snap a picture or select one from your gallery. In our tests, it also worked in the Chrome browser through the right-click menu.

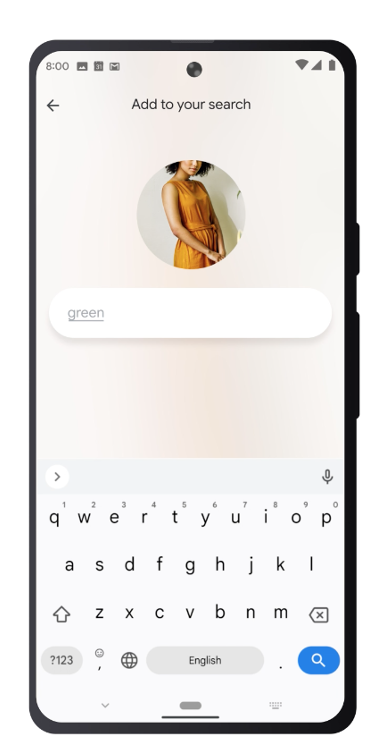

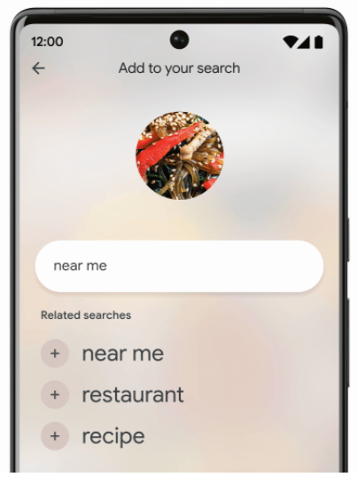

Next, add descriptive words (such as colours, brands, visual attributes or the type of literature) to narrow down the results gathered from the picture. If you include a phrase such as “near me”, the search can even direct you to nearby places where you can find what is shown in the picture.

Scene exploration – finding the object in a haystack

Lens can already recognise objects captured in a single frame, and Google aims to extend this to an entire scene in the near future. This upcoming capability, called scene exploration, will let users instantly glean insights about multiple objects in a wider scene as they pan their camera around.

Combined with multisearch, scene exploration will also let users narrow down their results with descriptors such as ingredients. This is useful for anyone who wants to avoid certain foods or eat more of them. For example, a user could look specifically for products with nut-free ingredients.
